Ethics of Generative AI: Best Practices for Responsible Use in the Netherlands

(Ethiek van Generatieve AI: Beste Praktijken voor Verantwoord Gebruik in Nederland)

Generative AI has shifted from novelty to necessity, powering everything from automated news to hyper-personalised marketing. In a country that prizes gegevensbescherming (data protection) and digitale transparantie (digital transparency), Dutch businesses must ask: how do we deploy these tools without eroding public trust? Recent moves by government, universities, and startups show the Netherlands is ready to fuse innovation with ethics—yet shared standards and enforceable safeguards are still taking shape.

The Dutch Context (De Nederlandse Context)

Ethical technology is embedded in Dutch policy and public expectation. The GDPR already mandates transparency and a lawful basis, such as consent, for processing personal data, but generative models that create text, images, or decisions without direct authorship expose fresh accountability gaps. A 2024 survey by the Rathenau Instituut found that Dutch citizens demand strict boundaries for AI in education, justice, and journalism, and the term vertrouwenstechnologie (trust technology) has gained currency as a business edge rather than a compliance chore.

Three Pillars of Responsible Use (Drie Pijlers van Verantwoord Gebruik)

Transparantie (transparency) requires disclosing how AI outputs are produced, especially when they sway public opinion or inform medical advice.

Aansprakelijkheid (accountability) means traceable responsibility; the Dutch AI Coalition urges auditable models and named data stewards.

Inclusiviteit (inclusivity) demands training data that reflects every Dutch community, from regional dialects to minority cultures, so systems don’t reinforce bias.

Practical Risks and Dutch Safeguards (Praktische Risico’s & Nederlandse Waarborgen)

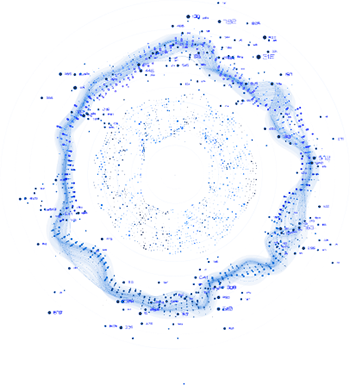

Synthetic misinformation (synthetische desinformatie) threatens elections and public safety. The Netherlands Forensic Institute is building detectors for AI-generated fakes used in court or journalism. Bias is equally urgent: 2023 research at TU Delft showed image generators defaulting to white male doctors and female cleaners of colour. Embracing ethiek-by-design (ethics by design), meaning dataset audits, fairness checks, and user-level content filters, can tackle such issues upstream.
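To make the idea of a fairness check concrete, here is a minimal sketch of a representation audit over generated images. It is an illustration only, not the method used in the TU Delft research: the function names, the annotation labels, and the 0.6 threshold are all assumptions, and a real audit would need a vetted annotation process and a threshold chosen for the use case.

```python
from collections import Counter


def representation_shares(labels):
    """Return each label's share of the sample as a fraction of the total."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def flag_skew(labels, max_share=0.6):
    """Return the labels whose share exceeds max_share.

    max_share is an arbitrary illustrative threshold, not a legal or
    scientific standard.
    """
    shares = representation_shares(labels)
    return sorted(label for label, share in shares.items() if share > max_share)


# Hypothetical human annotations for 10 images generated from the prompt "a doctor".
doctor_sample = ["man"] * 8 + ["woman"] * 2

print(representation_shares(doctor_sample))
print(flag_skew(doctor_sample))  # ['man']
```

Running a check like this per prompt before launch gives reviewers a simple signal to investigate; it does not prove a model is fair, and it only measures the attributes someone chose to annotate.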

Dutch Industry Leaders (Nederlandse Koplopers)

Digital publisher Blendle requires all AI-suggested headlines to pass human review, while Amsterdam agency DEPT® runs an internal ethics board auditing generative campaigns pre-launch. These practices show responsible AI can coexist with rapid product cycles when governance lives in daily workflows.

Government & EU Governance (Overheid en EU-Governance)

The Netherlands is piloting a national AI register aligned with the forthcoming EU AI Act. High-risk systems (healthcare triage, autonomous transport, public-sector decisions) must document training data, purpose, and oversight. For generative AI, drafts of the Act require synthetic-content labelling and a mandatory human-in-the-loop for sensitive use cases. Dutch firms that build compliance dashboards now will be ready when the Act lands.
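As a rough sketch of what a compliance dashboard might track, the record below captures the three items named above (training data, purpose, oversight) plus a synthetic-content labelling flag. The class name, field names, and checks are assumptions made for illustration; they are not the schema of the Dutch register or wording from the EU AI Act.

```python
from dataclasses import dataclass, fields


@dataclass
class RegisterEntry:
    """Hypothetical register record; field names are illustrative only."""
    system_name: str
    purpose: str
    training_data: str
    oversight: str
    generates_synthetic_content: bool = False
    output_labelled_as_synthetic: bool = False


def open_issues(entry):
    """List documentation gaps: blank text fields and unlabelled synthetic output."""
    issues = []
    for f in fields(entry):
        value = getattr(entry, f.name)
        if isinstance(value, str) and not value.strip():
            issues.append(f"missing: {f.name}")
    if entry.generates_synthetic_content and not entry.output_labelled_as_synthetic:
        issues.append("synthetic output is not labelled")
    return issues


# Hypothetical example: a headline generator with no oversight documented yet.
draft = RegisterEntry(
    system_name="headline-suggester",
    purpose="Suggest article headlines for editors",
    training_data="Licensed news archive",
    oversight="",
    generates_synthetic_content=True,
)

print(open_issues(draft))
```

Keeping the record as plain structured data makes it easy to export to whatever format a regulator or internal ethics board eventually asks for.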

Education & Public Awareness (Onderwijs & Publiek Bewustzijn)

Funded by SIDN Fonds, school programmes teach teens to spot deepfakes and question algorithms. Leiden University now embeds ethics modules across tech and humanities degrees, producing graduates fluent in both code and conscience.

Conclusion: A Dutch Blueprint for Trustworthy AI (Een Nederlandse Blauwdruk voor Betrouwbare AI)

Generative AI unlocks creativity and efficiency for Dutch organisations, but only when paired with transparency, accountability and bias mitigation. By embedding ethiek-by-design, complying with GDPR and the EU AI Act, and ensuring inclusive data practices, you turn responsible innovation into a strategic voordeel (advantage). Ready to build trustworthy AI? Contact Pyeclipse for your ethics audit and implementation roadmap.